I absolutely didn’t grok Pydantic the first time I had a coworker recommend using it. The big impedance mismatch was that I don’t use a lot of objects in my Python code. Most of my workflows are data transforms that look like:

1. suck data into a data frame
1. do some transforms on that data
1. maybe join to other data
1. get fancy and apply a function to a column in that data frame
1. dump the result into a database
1. create some graphs or tables from a summary

In a data science or data engineering workflow like that, the only real objects with methods and such are the data frames. And those aren’t my objects. They are Pandas objects. I just pass them around. My experience is that if I try to make my own objects for my type of workflow, I just end up with code that’s harder to read and less performant. Neither of which interests me. So I just don’t create my own objects in Python.

So when Pydantic was recommended to me I was like, “doesn’t feel like a tool that fits my hand.”

However, in this video, Jason Liu lays out a compelling case for why Pydantic is the magic glue that allows LLMs to fit inside of software workflows and not randomly break things (much). He lays out a workflow where the LLM’s output is fed into a Pydantic validator, which helps ensure that the results end up properly parsed and formatted.
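To make that concrete for myself, here’s a minimal sketch of the idea using plain Pydantic. It is not code from the video: the `Person` schema, the `parse_llm_output` helper, and the hard-coded JSON strings standing in for model responses are all things I made up for illustration.

```python
import json

from pydantic import BaseModel, Field, ValidationError


class Person(BaseModel):
    # Hypothetical schema: the shape we want the LLM's answer to have.
    name: str
    age: int = Field(ge=0)  # must be an integer >= 0


def parse_llm_output(raw: str):
    """Return a validated Person, or None if the text doesn't fit the schema.

    Hypothetical helper; `raw` stands in for whatever text the model returned.
    """
    try:
        return Person(**json.loads(raw))
    except (json.JSONDecodeError, TypeError, ValidationError):
        # Not JSON, not a JSON object, or fields that fail validation.
        return None


# Stand-ins for model responses (no LLM is actually called here).
good = parse_llm_output('{"name": "Ada", "age": "36"}')
bad = parse_llm_output('{"name": "Ada", "age": "thirty-six"}')

print(good)  # a Person with age coerced from the string "36" to an int
print(bad)   # None, because "thirty-six" can't be parsed as an int
```

The point is that everything downstream only ever sees a `Person` with a real integer age, or nothing at all. In a real workflow you would probably retry the LLM call or log the validation error instead of quietly returning `None`.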

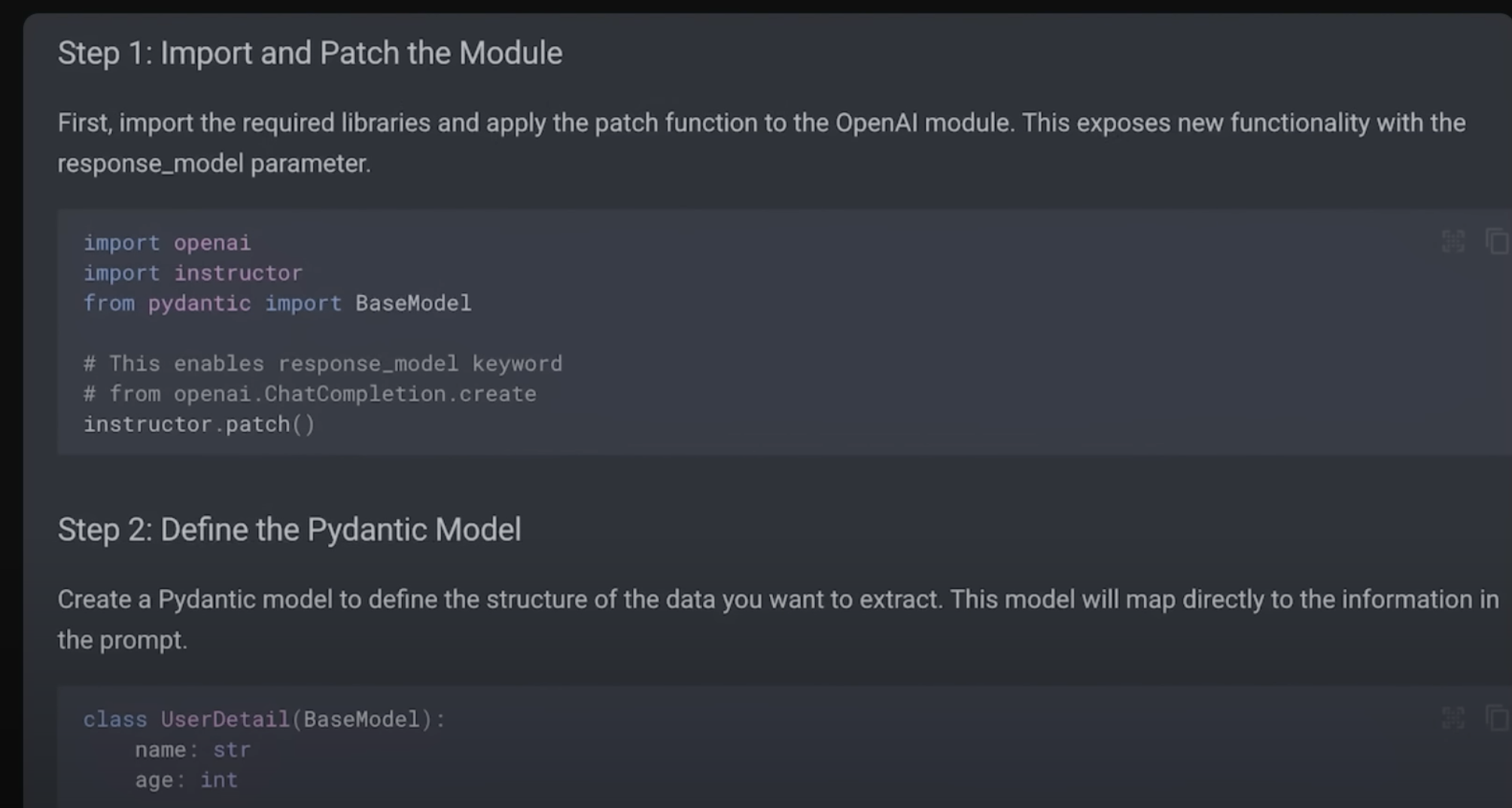

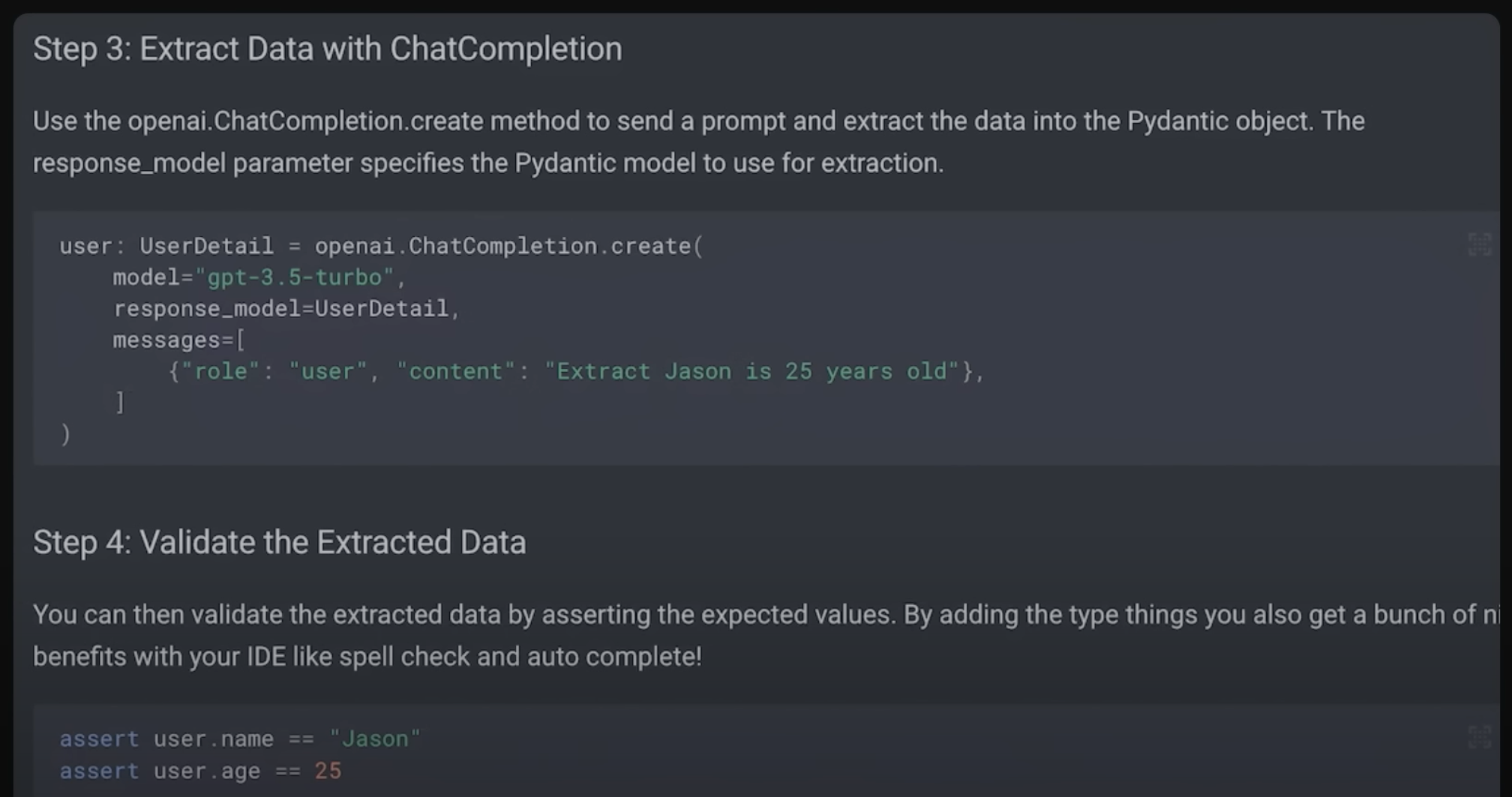

Jason created a lightweight library called instructor that wraps these ideas so they are easy to use.

Instructor is used in concert with OpenAI function calling to ensure correctly formatted values are going in and out of the model. Seems like a cool approach.

This is worth watching if you’re interested in calling LLMs with code.